
Today we're announcing AWS Parallel Computing Service (AWS PCS), a new managed service that helps customers set up and manage high performance computing (HPC) clusters so they can run their workloads at virtually any scale on AWS. Using the Slurm scheduler, they can work in a familiar HPC environment, accelerating their time to results instead of worrying about infrastructure.

In November 2018, we released AWS ParallelCluster, an AWS-supported open source cluster management tool that helps you deploy and manage HPC clusters in the AWS Cloud. With AWS ParallelCluster, customers can also quickly build and deploy proof-of-concept and production HPC compute environments. They can use the AWS ParallelCluster command-line interface, API, Python library, and user interface, all installed from open source packages. They are responsible for updates, which can include tearing down and redeploying clusters. Many customers, though, have asked us for a fully managed AWS service to eliminate the operational tasks of building and operating an HPC environment.

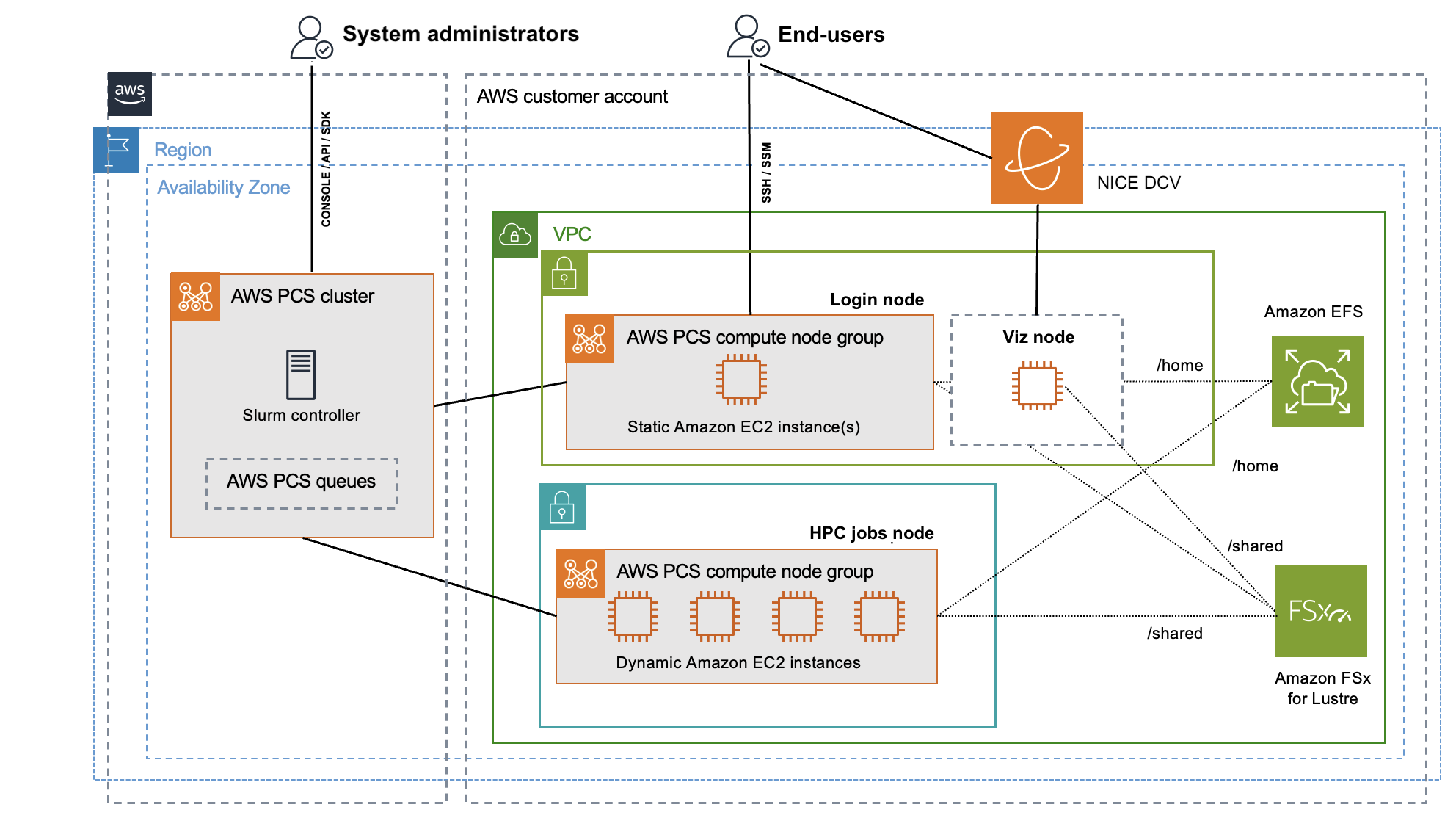

AWS PCS simplifies HPC environments managed by AWS and is accessible through the AWS Management Console, the AWS SDK, and the AWS Command-Line Interface (AWS CLI). Your system administrators can create managed Slurm clusters that use their compute and storage configurations, identity, and job allocation preferences. AWS PCS uses Slurm, a fault-tolerant job scheduler, for scheduling and orchestrating simulations. End users such as scientists, researchers, and engineers can log in to AWS PCS clusters to run and manage HPC jobs, use interactive software on virtual desktops, and access data. They can quickly bring their workloads to AWS PCS without significant effort to port code.

You can use fully managed NICE DCV remote desktops for remote visualization, and access job telemetry or application logs, so that specialists can manage your HPC workflows in one place.

AWS PCS is designed for a wide range of traditional and emerging, compute-intensive or data-intensive, engineering and scientific workloads in areas such as computational fluid dynamics, climate modeling, finite element analysis, electronic design automation, and reservoir simulation, using familiar ways of preparing, executing, and analyzing simulations and computations.

Getting started with AWS Parallel Computing Service

To try out AWS PCS, you can use our tutorial for creating a simple cluster in the AWS documentation. First, you create a virtual private cloud (VPC) with AWS CloudFormation and shared storage in Amazon Elastic File System (Amazon EFS) in your account, in the AWS Region where you will try AWS PCS. To learn more, visit Create a VPC and Create shared storage in the AWS documentation.

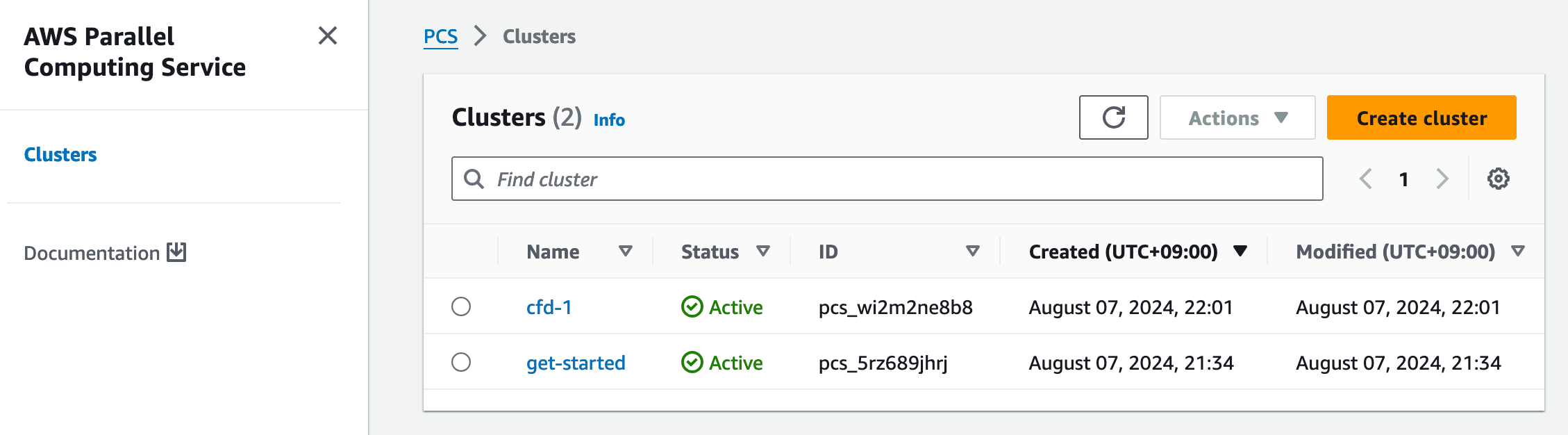

1. Create a cluster

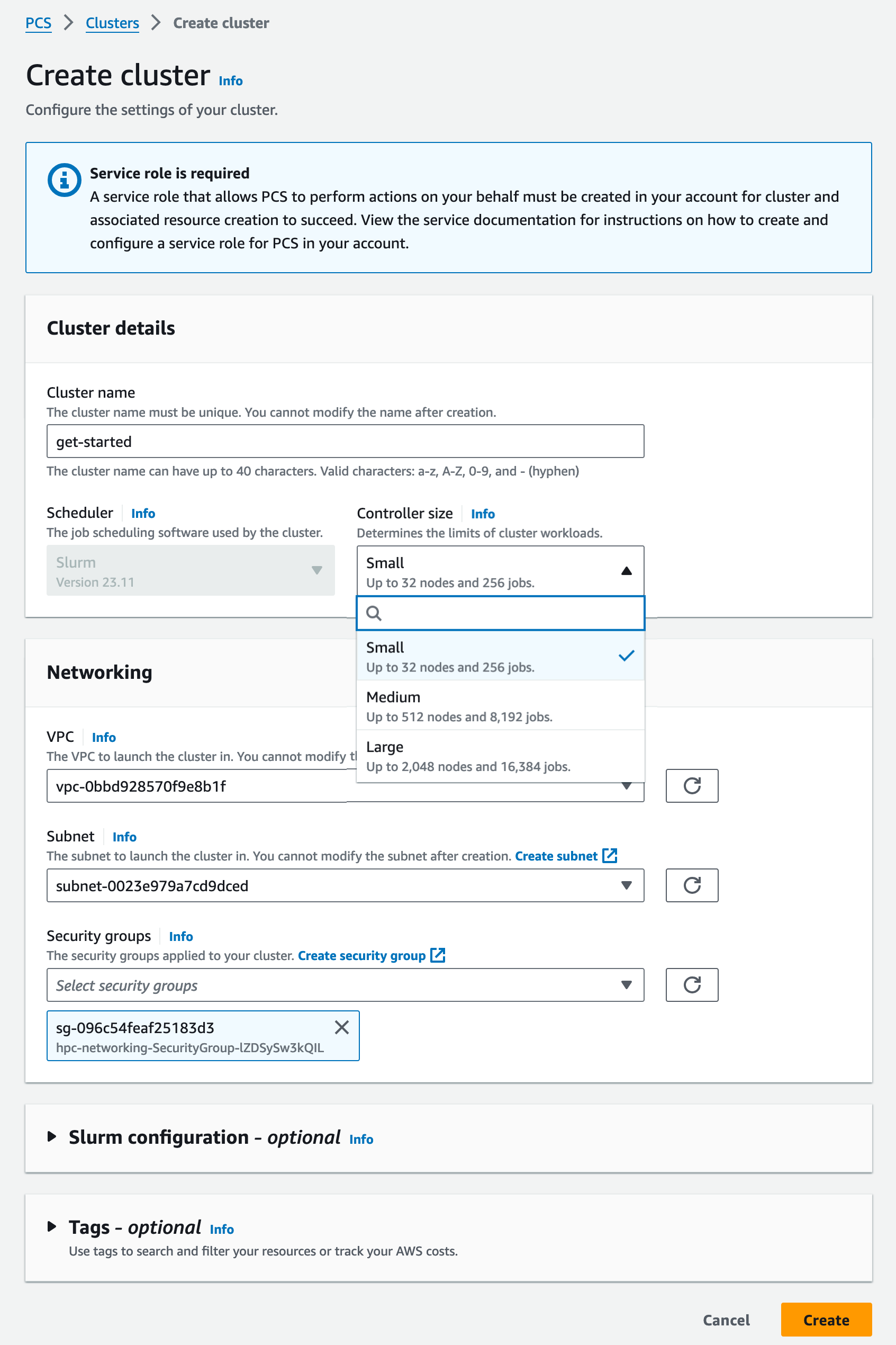

In the AWS PCS console, choose Create cluster. A cluster is a persistent resource for managing resources and running workloads.

Next, enter your cluster name and choose the controller size of your Slurm scheduler. You can choose Small (up to 32 nodes and 256 jobs), Medium (up to 512 nodes and 8,192 jobs), or Large (up to 2,048 nodes and 16,384 jobs) as the workload limit of the cluster. In the Networking section, choose the VPC you created, the subnet in which to launch the cluster, and the security group to apply to your cluster.

Optionally, you can set Slurm configuration such as the idle time before compute nodes scale down, the directory of Prolog and Epilog scripts on launched compute nodes, and the resource selection algorithm parameter used by Slurm.

Choose Create cluster. It takes some time for the cluster to be provisioned.
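The size limits above lend themselves to a quick sanity check before you create a cluster. The following is a minimal sketch, not part of AWS PCS or its SDK: `SIZE_LIMITS` and `pick_controller_size` are hypothetical names, and the limits are simply the figures quoted in this post.

```python
# Hypothetical helper: the limits below are the figures quoted in this
# post, not values read from an AWS API.
SIZE_LIMITS = [
    # (size name, max nodes, max jobs), ordered smallest first
    ("Small", 32, 256),
    ("Medium", 512, 8192),
    ("Large", 2048, 16384),
]


def pick_controller_size(nodes: int, jobs: int) -> str:
    """Return the smallest controller size that fits the expected workload."""
    if nodes < 0 or jobs < 0:
        raise ValueError("nodes and jobs must be non-negative")
    for name, max_nodes, max_jobs in SIZE_LIMITS:
        if nodes <= max_nodes and jobs <= max_jobs:
            return name
    raise ValueError("workload exceeds the largest controller size")


if __name__ == "__main__":
    # Eight nodes and a hundred queued jobs fit the smallest size.
    print(pick_controller_size(nodes=8, jobs=100))
```

Both limits must hold at once, so a small cluster with a very deep job backlog can still need a larger controller.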

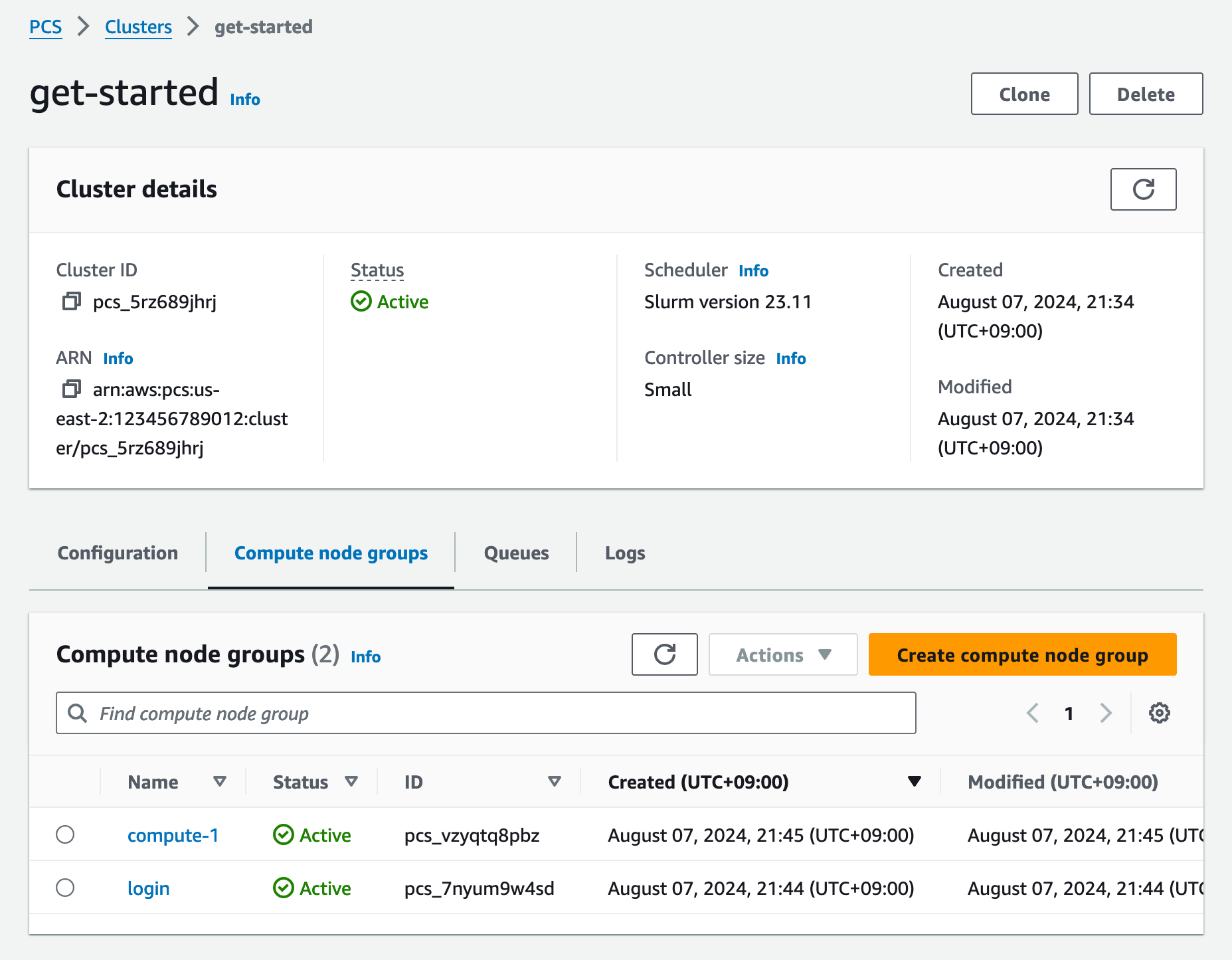

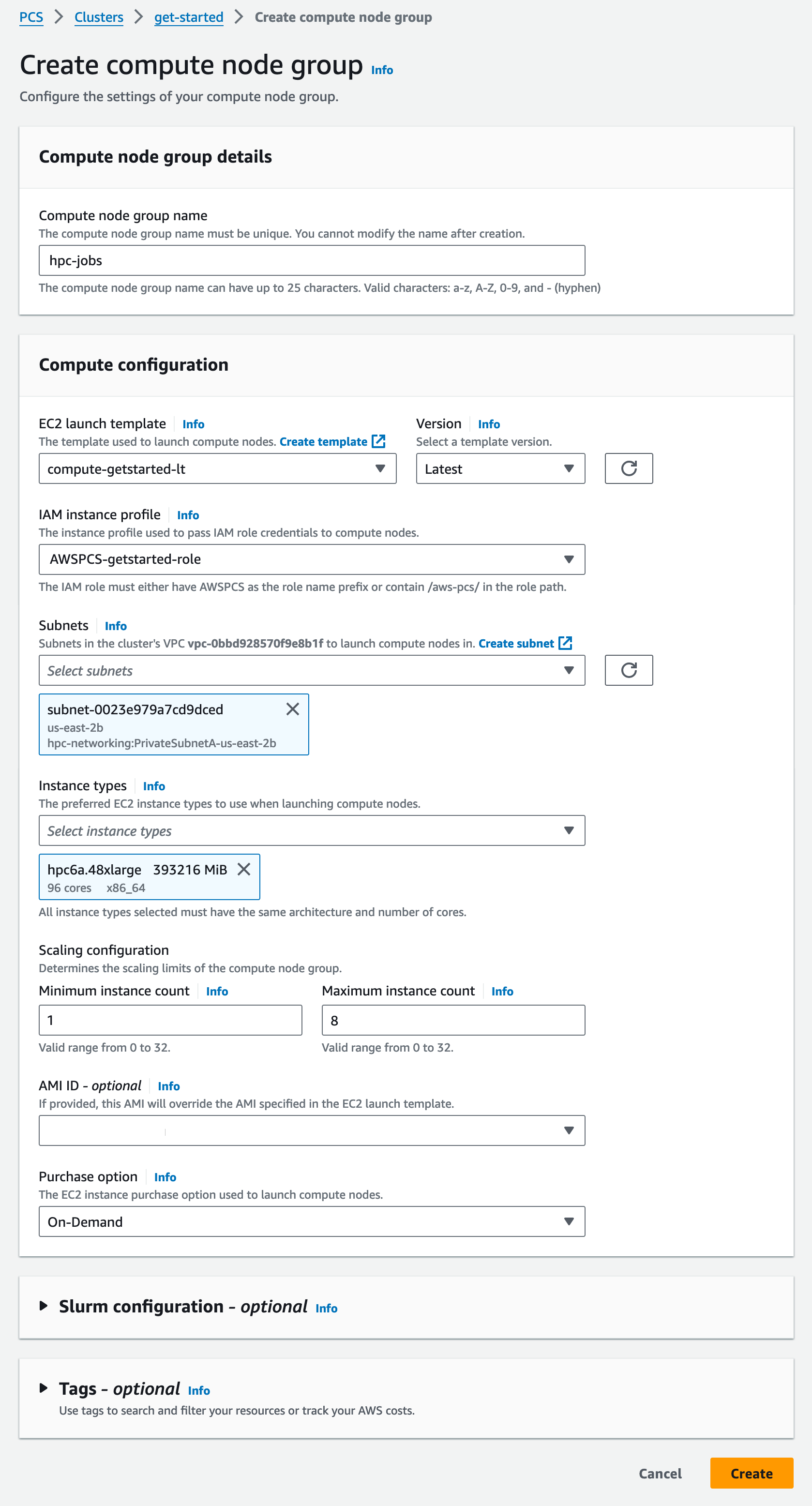

2. Create compute node groups

After creating your cluster, you can create compute node groups, which are virtual collections of Amazon Elastic Compute Cloud (Amazon EC2) instances that AWS PCS uses to provide interactive access to a cluster or to run jobs in a cluster. When you define a compute node group, you specify common attributes such as EC2 instance types, minimum and maximum instance counts, target VPC subnets, Amazon Machine Image (AMI), purchase option, and custom launch configuration. Compute node groups require an instance profile to pass an AWS Identity and Access Management (IAM) role to an EC2 instance, and an EC2 launch template that AWS PCS uses to configure the EC2 instances it launches. To learn more, visit Create a launch template and Create an instance profile in the AWS documentation.

To create a compute node group in the console, go to your cluster and choose the Compute node groups tab and the Create compute node group button.

You can create two compute node groups: a login node group to be accessed by end users and a job node group to run HPC jobs.

To create a compute node group that runs HPC jobs, enter a compute node group name and select a previously created EC2 launch template, IAM instance profile, and subnets in which to launch compute nodes in your cluster VPC.

Next, choose the EC2 instance types you prefer to use when launching compute nodes, and the minimum and maximum instance counts for scaling. I chose the hpc6a.48xlarge instance type and a scaling limit of eight instances. For a login node, you can choose a smaller instance, such as one c6i.xlarge instance. You can also choose either the On-Demand or Spot EC2 purchase option if the instance type supports it. Optionally, you can choose a specific AMI.

Choose Create. It takes some time for the compute node group to be provisioned. To learn more, visit Create a compute node group to run jobs and Create a compute node group for login nodes in the AWS documentation.

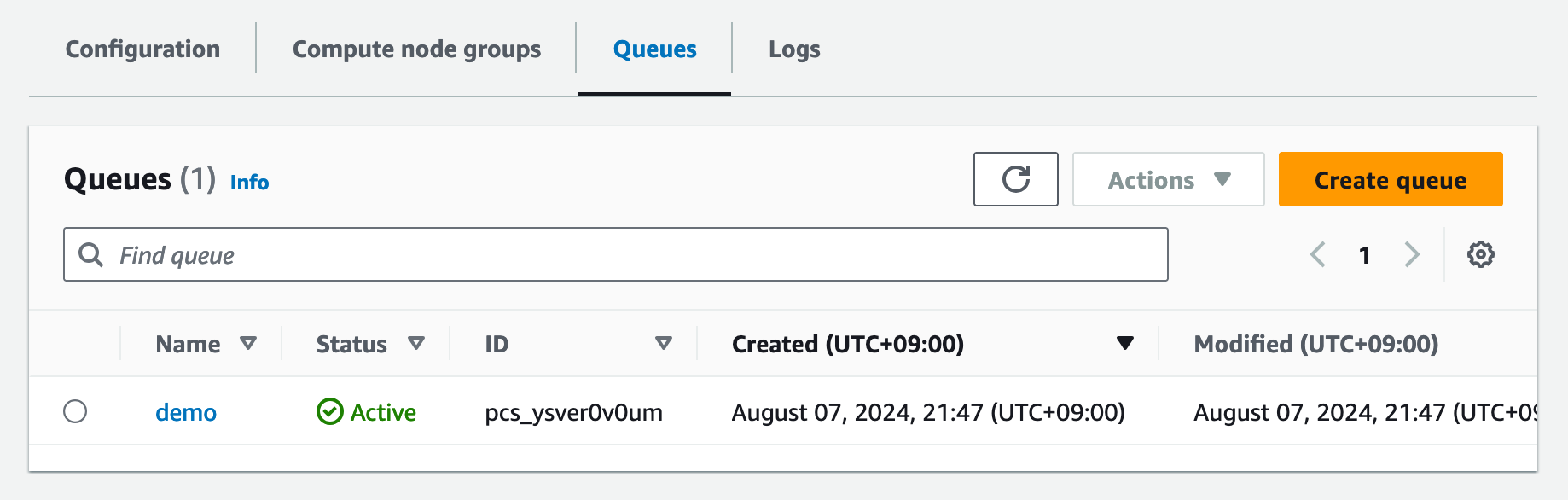

3. Create and run your HPC jobs

After you create your compute node groups, you submit a job to a queue to run it. The job remains in the queue until AWS PCS schedules it to run on a compute node group, based on available provisioned capacity. Each queue is associated with one or more compute node groups, which provide the EC2 instances needed to do the processing.

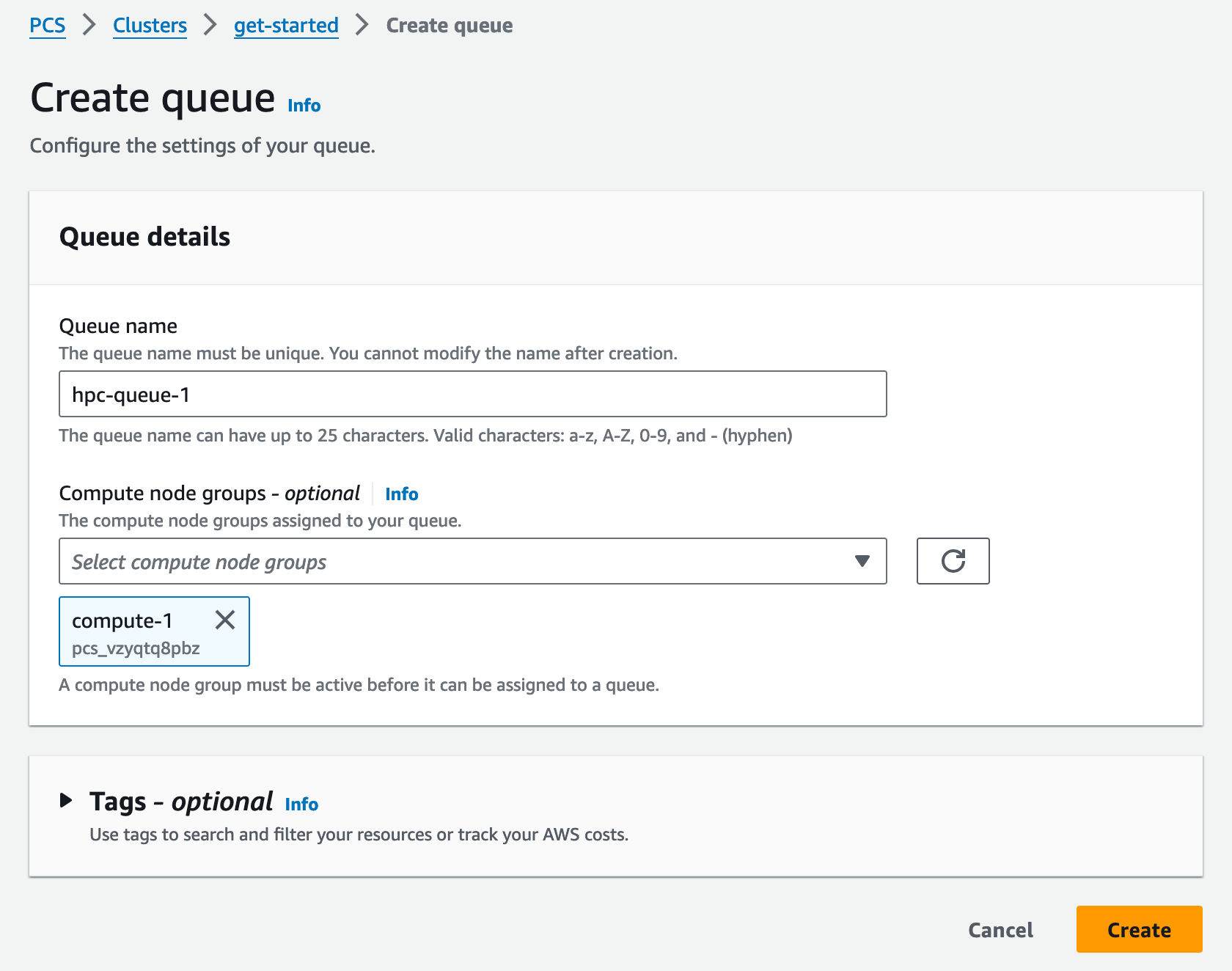

To create a queue in the console, go to your cluster and choose the Queues tab and the Create queue button.

Enter your queue name and choose the compute node groups to assign to your queue.

Choose Create and wait while the queue is created.

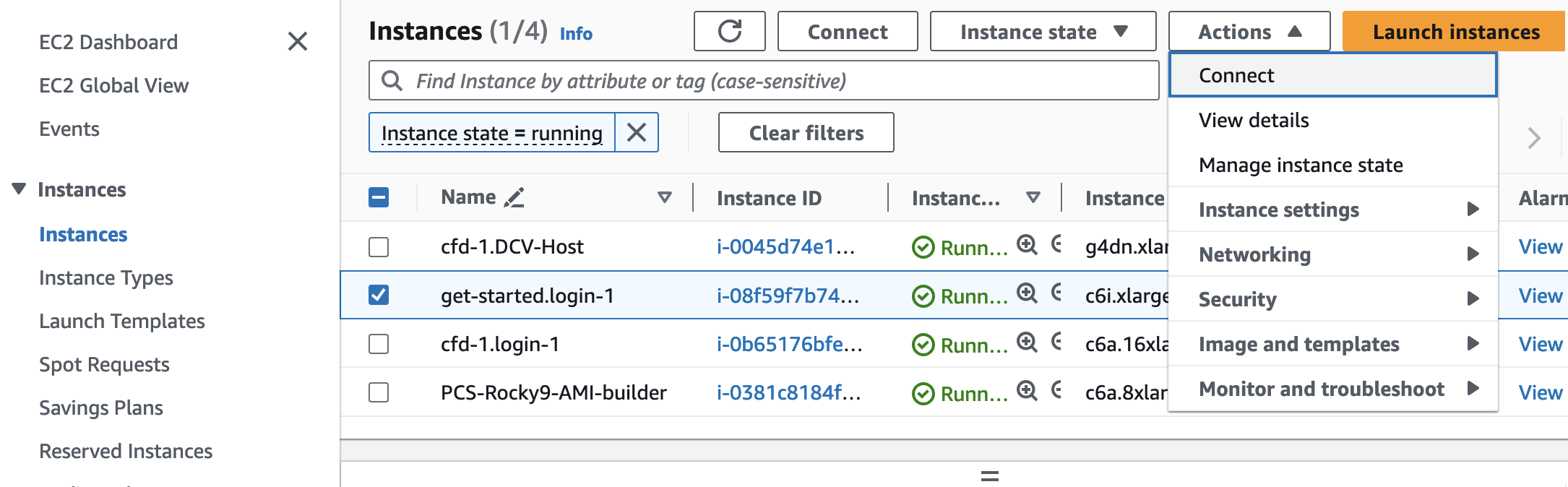

Once the login compute node group is active, you can use AWS Systems Manager to connect to the EC2 instance it created. Go to the Amazon EC2 console and choose the EC2 instance of your login compute node group. To learn more, visit Create a queue to submit and manage jobs and Connect to your cluster in the AWS documentation.

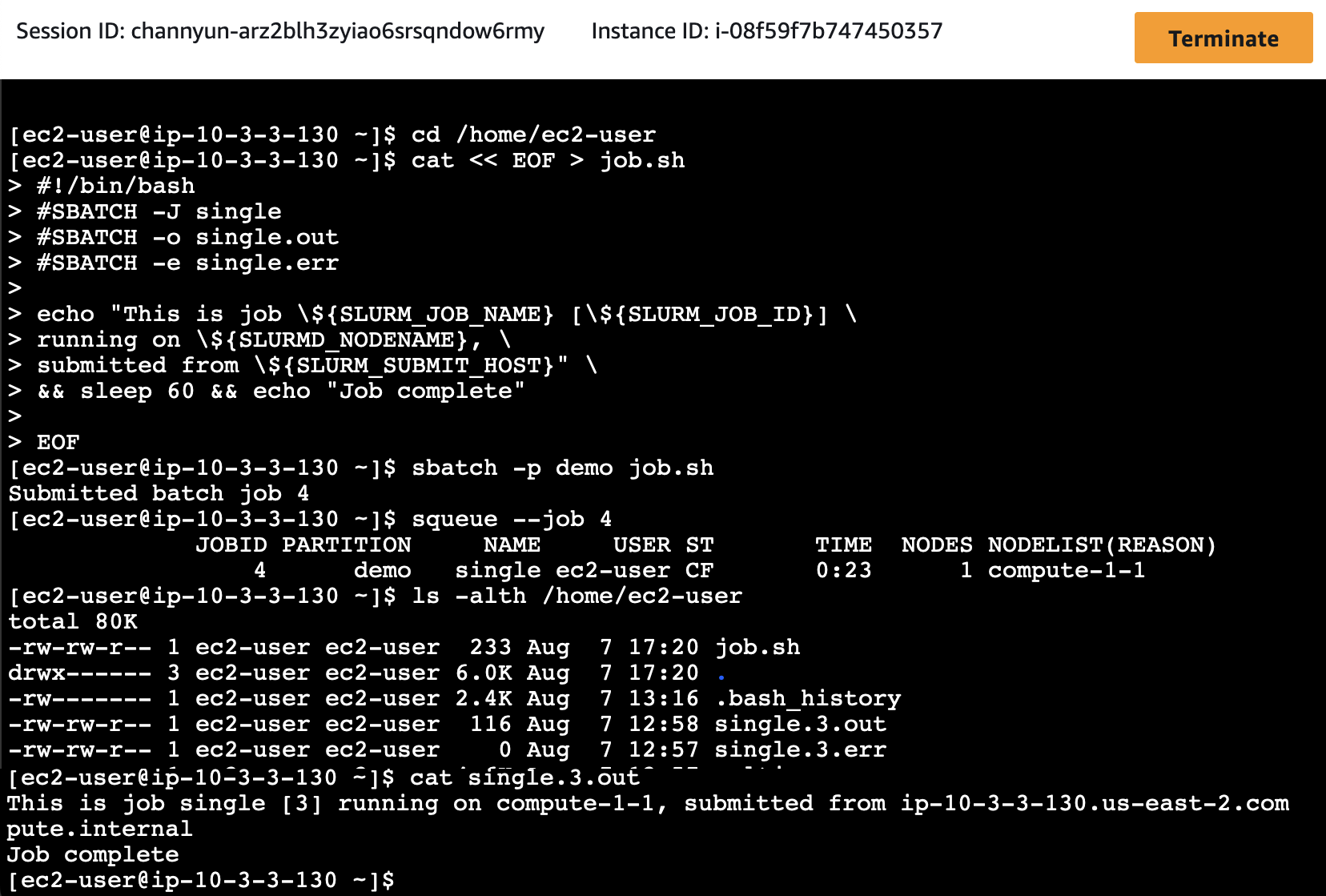

To run a job using Slurm, you prepare a submission script that specifies the job requirements and submit it to a queue with the sbatch command. Typically, this is done from a shared directory so that the login and compute nodes have a common space for accessing files.

You can also run a message passing interface (MPI) job on AWS PCS using Slurm. To learn more, visit Run a single-node job with Slurm or Run a multi-node MPI job with Slurm in the AWS documentation.
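To make the shape of a submission script concrete, here is a minimal sketch that renders one as text using standard `#SBATCH` directives. The `render_sbatch_script` helper, the job name, the queue name `demo`, and the command are all placeholders for illustration. The sketch also assumes that the AWS PCS queue name is what you pass as the Slurm partition, which you should confirm against the AWS documentation.

```python
# Sketch: render a Slurm batch script as text. Every name and value here
# (job name, queue name, command) is a placeholder for illustration.
def render_sbatch_script(job_name, partition, nodes, tasks_per_node, command):
    """Build a submission script from standard #SBATCH directives."""
    if nodes < 1 or tasks_per_node < 1:
        raise ValueError("nodes and tasks_per_node must be at least 1")
    lines = [
        "#!/bin/bash",
        f"#SBATCH --job-name={job_name}",
        # Assumption: the partition name matches the AWS PCS queue name.
        f"#SBATCH --partition={partition}",
        f"#SBATCH --nodes={nodes}",
        f"#SBATCH --ntasks-per-node={tasks_per_node}",
        # In sbatch filename patterns, %x is the job name and %j the job ID.
        "#SBATCH --output=%x_%j.out",
        "",
        command,
    ]
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    print(render_sbatch_script("hello", "demo", 1, 1, "srun hostname"))
```

You would save the rendered text to a file in the shared directory, for example `hello.sh`, and submit it from the login node with `sbatch hello.sh`.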

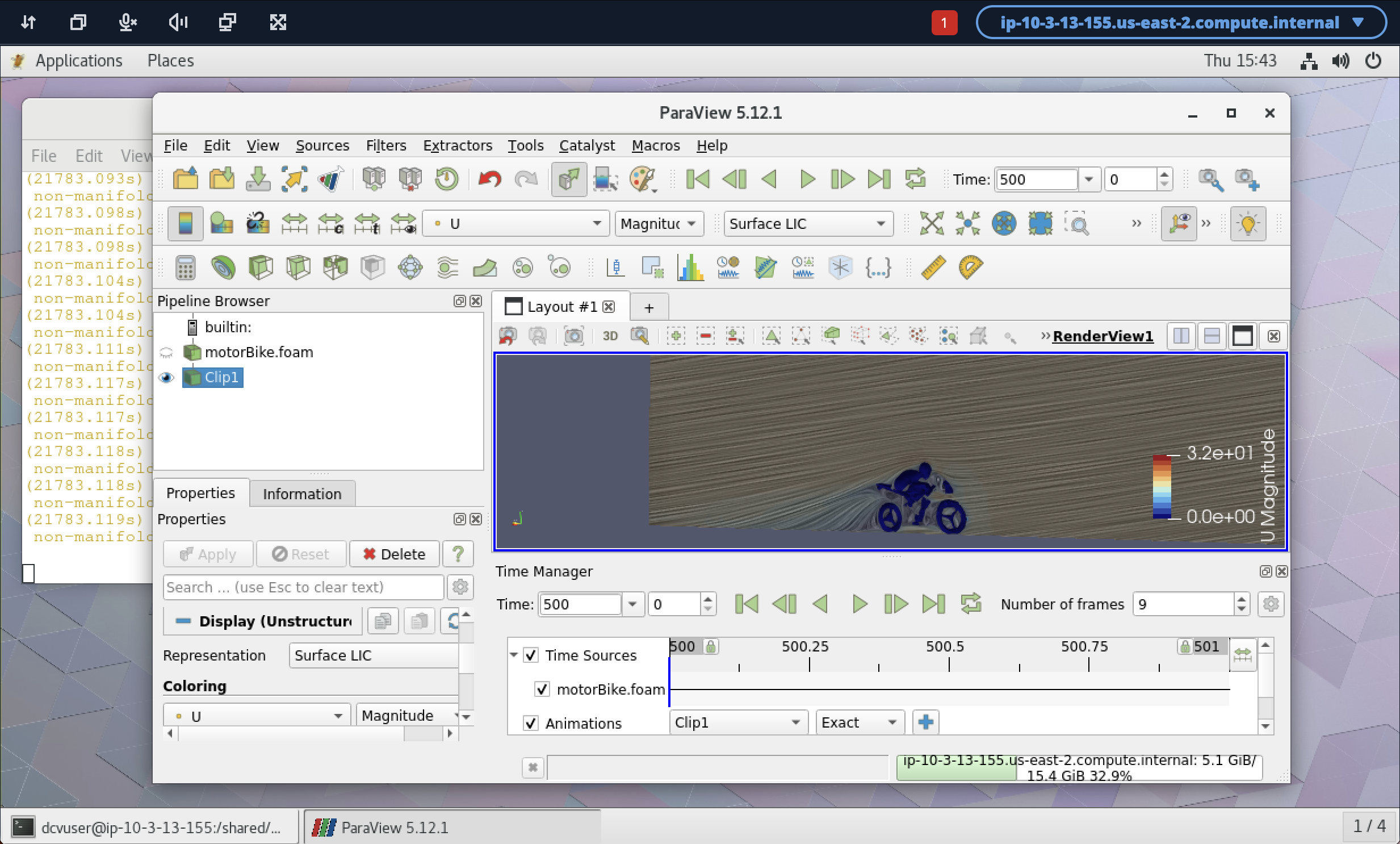

You can connect to a fully managed NICE DCV remote desktop for visualization. To get started, use the CloudFormation template from the HPC Recipes for AWS GitHub repository.

In this example, I used the OpenFOAM motorBike simulation to calculate the steady flow around a motorcycle and rider. This simulation was run on 288 cores across three hpc6a instances. The output can be visualized in a ParaView session after logging in to the DCV instance.

Finally, after you are done with HPC jobs on the cluster and node groups that you created, you should delete those resources to avoid unnecessary charges. To learn more, visit Delete your AWS resources in the AWS documentation.
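The instance count for a run like this is simple arithmetic. The sketch below assumes 96 cores per instance, which is what 288 cores across three instances implies. `instances_needed` is a hypothetical helper, and `MAX_INSTANCES` is the scaling limit of eight chosen for the node group earlier.

```python
CORES_PER_INSTANCE = 96  # assumption: 288 cores / 3 instances, as in this run
MAX_INSTANCES = 8        # scaling limit chosen for the compute node group


def instances_needed(total_cores: int,
                     cores_per_instance: int = CORES_PER_INSTANCE) -> int:
    """Round up to the number of whole instances that cover total_cores."""
    if total_cores < 1 or cores_per_instance < 1:
        raise ValueError("core counts must be at least 1")
    # Integer ceiling division avoids floating-point rounding.
    return (total_cores + cores_per_instance - 1) // cores_per_instance


if __name__ == "__main__":
    count = instances_needed(288)
    print(count, "instances; within scaling limit:", count <= MAX_INSTANCES)
```

A job that asks for even one core more than a whole number of instances rounds up to the next instance, so it helps to size the decomposition to a multiple of the per-instance core count.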

Things to know

Here are a few things you should know about this feature:

- Slurm versions – AWS PCS initially supports Slurm 23.11 and provides mechanisms designed to enable customers to upgrade their Slurm major versions when new versions are added. Additionally, AWS PCS is designed to automatically update the Slurm controller with patch versions. To learn more, visit Slurm versions in the AWS documentation.

- Capacity Reservations – You can reserve EC2 capacity in a specific Availability Zone and for a specific duration using On-Demand Capacity Reservations to make sure that you have the necessary compute capacity available when you need it. To learn more, visit Capacity Reservations in the AWS documentation.

- Network file systems – You can attach network storage volumes where data and files can be written and accessed, including Amazon FSx for NetApp ONTAP, Amazon FSx for OpenZFS, and Amazon File Cache as well as Amazon EFS and Amazon FSx for Lustre. You can also use self-managed volumes, such as NFS servers. To learn more, visit Network file systems in the AWS documentation.

Available now

AWS Parallel Computing Service is now available in the US East (N. Virginia), US East (Ohio), US West (Oregon), Asia Pacific (Singapore), Asia Pacific (Sydney), Asia Pacific (Tokyo), Europe (Frankfurt), Europe (Ireland), and Europe (Stockholm) Regions.

The resources that AWS PCS manages run in your AWS account, and you are billed for them accordingly. For more information, see the AWS PCS Pricing page.

Give it a try and send feedback to AWS re:Post or through your usual AWS Support contacts.

– Channy

P.S. Special thanks to Matthew Vaughn, principal advocate at AWS, for his help in creating the HPC test environment.
